AI-powered search engines such as Perplexity AI go beyond traditional keyword matching: they return contextually relevant results together with AI-generated summaries. This article explores how to build a similar system with SWIRL, an open-source framework that combines large language models with flexible search capabilities.

Understanding AI-Powered Search

Traditional search engines like Google rely primarily on keyword matching and link analysis. Modern AI-powered search engines, however, leverage large language models (LLMs) to:

- Understand natural language queries

- Process and analyze search results

- Generate coherent summaries

- Provide contextual responses with citations
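To make the last two points concrete, here is a minimal sketch of how search results might be packed into an LLM prompt so that the summary can cite its sources. The `SearchResult` type and `build_cited_prompt` function are hypothetical names used for illustration; they are not part of SWIRL or any LLM SDK, and the prompt wording is only one plausible choice.

```python
from dataclasses import dataclass


@dataclass
class SearchResult:
    title: str
    url: str
    snippet: str


def build_cited_prompt(query: str, results: list[SearchResult]) -> str:
    """Number each source so the model can cite it inline as [1], [2], ..."""
    sources = "\n".join(
        f"[{i}] {r.title} ({r.url})\n{r.snippet}"
        for i, r in enumerate(results, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources inline as [n].\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}\n"
    )


results = [
    SearchResult(
        "SWIRL on GitHub",
        "https://github.com/swirlai/swirl-search",
        "Open-source AI search.",
    ),
]
prompt = build_cited_prompt("What is SWIRL?", results)
```

The resulting string would then be sent to whichever LLM the system uses; numbering the sources up front is what lets the presentation step map each `[n]` back to a URL.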

SWIRL: An Open-Source Alternative

SWIRL (Semantic Web Information Retrieval Layer) is an open-source framework that allows developers to build Perplexity-like search experiences. It combines traditional search capabilities with AI-powered features, making it an excellent foundation for building advanced search applications.

Key Features

- Natural Language Processing

- Multi-source search capabilities

- AI-powered summarization

- Result re-ranking

- Source citation

- Docker-based deployment

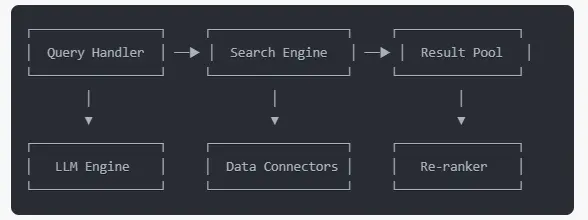

Technical Architecture

Core Components

Implementation Layers

- Query Processing Layer
  - Natural language understanding
  - Query parsing and optimization
  - Context extraction
- Search Layer
  - Multiple search provider support
  - Parallel query execution
  - Result aggregation
- AI Processing Layer
  - Result summarization
  - Content generation
  - Source verification
- Presentation Layer
  - Result formatting
  - Citation management
  - User interface
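The flow through these layers can be sketched roughly as follows. This is an illustration under stated assumptions, not SWIRL's internal code: `search_provider` is a stand-in that returns one canned hit instead of calling a real backend, `federated_search` is a hypothetical name, and the AI Processing Layer is omitted because it would require a model call.

```python
import asyncio


async def search_provider(name: str, query: str) -> list[dict]:
    """Stand-in for a real provider call; returns one canned hit."""
    await asyncio.sleep(0)  # yield control, as a network call would
    return [{
        "source": name,
        "title": f"{name} result for {query!r}",
        "url": f"https://example.com/{name}",
    }]


async def federated_search(query: str, providers: list[str]) -> list[dict]:
    # Query Processing Layer: trivial normalization (collapse spaces, lowercase).
    normalized = " ".join(query.split()).lower()
    # Search Layer: query all providers in parallel, then aggregate the batches.
    batches = await asyncio.gather(
        *(search_provider(p, normalized) for p in providers)
    )
    merged = [hit for batch in batches for hit in batch]
    # Presentation Layer: attach a citation number to each hit.
    return [{**hit, "citation": i} for i, hit in enumerate(merged, start=1)]


hits = asyncio.run(federated_search("  What is   SWIRL ", ["web", "docs"]))
```

`asyncio.gather` returns results in the order the providers were listed, so citation numbers stay stable regardless of which provider answers first.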

Setting Up Your Own Instance

Prerequisites

- Docker and Docker Compose

- OpenAI API key

- Basic understanding of containerization

Step-by-Step Implementation

1. Initial Setup

# Download the Docker configuration

curl https://raw.githubusercontent.com/swirlai/swirl-search/main/docker-compose.yaml -o docker-compose.yaml

# Set environment variables

export MSAL_CB_PORT=8000

export MSAL_HOST=localhost

export OPENAI_API_KEY='your-OpenAI-API-key'
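Before launching, it can help to confirm that the variables exported above are actually set in the current shell. A minimal sketch in Python: the function name `missing_env_vars` is hypothetical, and the variable list simply mirrors the three exports in this step.

```python
import os

# Mirrors the three variables exported in the setup step above.
REQUIRED_VARS = ("MSAL_CB_PORT", "MSAL_HOST", "OPENAI_API_KEY")


def missing_env_vars(env=None) -> list[str]:
    """Return the required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]
```

Calling `missing_env_vars()` with no arguments checks the real environment; an empty list means all three variables are present.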

2. Launch the Container

# For macOS/Linux

docker-compose pull && docker-compose up

# For Windows (PowerShell)

docker compose up

3. Configure Search Sources

- Access the admin panel at http://localhost:8000

- Log in with the default credentials: admin/password

- Configure search providers and connectors
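Once providers are configured, a quick smoke test is to issue a search over HTTP. The sketch below only builds the request URL. The `/swirl/search/` path and the `q` parameter are assumptions here and should be confirmed against the SWIRL documentation for your version; `build_search_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode


def build_search_url(
    query: str,
    base_url: str = "http://localhost:8000",
    path: str = "/swirl/search/",  # assumed endpoint; verify for your version
) -> str:
    """Build a GET URL for a search request against a local instance."""
    return f"{base_url.rstrip('/')}{path}?{urlencode({'q': query})}"


url = build_search_url("ai search")
```

The URL can then be opened in a browser or fetched with any HTTP client, using the same credentials as the admin panel.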

Extending Functionality

Adding Custom Data Sources

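class CustomDataConnector:
    def __init__(self, config):
        self.config = config

    async def search(self, query):
        # Implement custom search logic.
        # _fetch_results and _process_results are placeholders to implement:
        # the first retrieves raw hits, the second normalizes them.
        results = await self._fetch_results(query)
        return self._process_results(results)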
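To show how the two helper methods might be filled in, here is a self-contained sketch that searches a small in-memory corpus. `InMemoryConnector`, the `documents` and `max_results` config keys, and the output shape are all hypothetical choices made for illustration; a real SWIRL connector would follow the framework's own connector interface.

```python
import asyncio


class InMemoryConnector:
    """Hypothetical connector that searches a small in-memory corpus."""

    def __init__(self, config):
        self.config = config
        self.documents = config.get("documents", [])

    async def search(self, query):
        results = await self._fetch_results(query)
        return self._process_results(results)

    async def _fetch_results(self, query):
        # A real connector would call an HTTP API or a database here.
        terms = query.lower().split()
        return [
            d for d in self.documents
            if any(t in d["body"].lower() for t in terms)
        ]

    def _process_results(self, results):
        # Normalize raw records into a common shape and cap the count.
        limit = self.config.get("max_results", 10)
        return [
            {"title": d["title"], "snippet": d["body"][:80]}
            for d in results[:limit]
        ]


connector = InMemoryConnector({
    "documents": [
        {"title": "Docker guide", "body": "Run containers with Docker Compose."},
        {"title": "Search basics", "body": "Keyword matching and ranking."},
    ],
})
hits = asyncio.run(connector.search("docker"))
```

Keeping fetching and normalization in separate methods means a new data source usually only needs a different `_fetch_results`.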

Implementing Custom Re-ranking

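class CustomReRanker:
    def __init__(self, model):
        self.model = model

    def rerank(self, results, query):
        # _calculate_relevance is a placeholder to implement; it should
        # return a dict mapping each result's id to a relevance score.
        scores = self._calculate_relevance(results, query)
        return sorted(results, key=lambda x: scores[x.id], reverse=True)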
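As one possible way to fill in `_calculate_relevance`, the sketch below scores each result by the fraction of query terms it contains. This simple term-overlap heuristic replaces the model-based scoring implied by the `model` argument above, purely so the example runs without external dependencies; the `Result` type and `TermOverlapReRanker` name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Result:
    id: str
    text: str


class TermOverlapReRanker:
    """Scores each result by the fraction of query terms it contains."""

    def rerank(self, results, query):
        scores = self._calculate_relevance(results, query)
        return sorted(results, key=lambda r: scores[r.id], reverse=True)

    def _calculate_relevance(self, results, query):
        terms = set(query.lower().split())
        scores = {}
        for r in results:
            words = set(r.text.lower().split())
            scores[r.id] = len(terms & words) / len(terms) if terms else 0.0
        return scores


results = [
    Result("a", "introduction to cooking"),
    Result("b", "open source search engine"),
    Result("c", "search tips"),
]
ranked = TermOverlapReRanker().rerank(results, "open source search")
```

Because `sorted` is stable, results with equal scores keep the order in which the search layer returned them.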

Advanced Features

1. Enhanced Result Processing

- Semantic similarity scoring

- Entity recognition

- Cross-reference verification

2. Custom Plugins

- Domain-specific processors

- Custom ranking algorithms

- Specialized data connectors

3. Performance Optimization

- Result caching

- Query optimization

- Resource management
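As a small illustration of the similarity scoring mentioned under Enhanced Result Processing, the sketch below computes cosine similarity between term-count vectors. Note that this is lexical rather than truly semantic: a production system would more likely compare embedding vectors from a language model, and the term-count version stands in here only because it needs no external libraries.

```python
import math
from collections import Counter


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity between the term-count vectors of two texts."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = (
        math.sqrt(sum(c * c for c in va.values()))
        * math.sqrt(sum(c * c for c in vb.values()))
    )
    return dot / norm if norm else 0.0
```

The same function works unchanged on embedding vectors once the `Counter` construction is replaced with a lookup of precomputed vectors, since only the dot product and norms matter.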

Best Practices and Considerations

Security

- API Key Management
  - Use environment variables
  - Implement key rotation
  - Monitor usage
- Access Control
  - Implement role-based access
  - Set up authentication
  - Log access patterns
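Role-based access can be as simple as a mapping from roles to permitted actions. The sketch below is illustrative only: the role names, action names, and the `is_allowed` helper are hypothetical and do not reflect SWIRL's built-in permission model.

```python
# Hypothetical roles and actions, chosen for illustration.
ROLE_PERMISSIONS = {
    "admin": {"search", "configure_providers", "view_logs"},
    "analyst": {"search", "view_logs"},
    "viewer": {"search"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True if the role grants the action; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

Defaulting unknown roles to an empty permission set keeps the check fail-closed, which is usually the safer behavior for an access-control helper.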

Performance

- Query Optimization
  - Implement caching
  - Use parallel processing
  - Optimize resource usage
- Resource Management
  - Monitor system resources
  - Implement rate limiting
  - Scale horizontally when needed
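For the caching point, a time-to-live (TTL) cache is a common starting place, since repeated queries can reuse recent results without calling the providers or the LLM again. The `TTLCache` class below is a hypothetical in-process sketch; a multi-instance deployment would more likely use a shared cache service instead.

```python
import time


class TTLCache:
    """Caches query results for a fixed number of seconds."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # drop the stale entry
            return None
        return value

    def set(self, key, value):
        self._store[key] = (self.clock() + self.ttl, value)
```

A search handler would call `get` with the normalized query first and fall back to the full search, followed by `set`, only on a miss.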

Deployment Considerations

Production Environment

- Infrastructure
  - Load balancing
  - High availability
  - Monitoring and logging
- Scaling
  - Container orchestration
  - Resource allocation
  - Database scaling

Maintenance

- Updates
  - Regular security patches
  - Feature updates
  - Dependency management
- Monitoring
  - Performance metrics
  - Error tracking
  - Usage analytics

Future Enhancements

- Advanced AI Features
  - Multi-modal search
  - Conversational interfaces
  - Context awareness
- Integration Capabilities
  - API expansion
  - Third-party integrations
  - Custom workflow support

Conclusion

Building a Perplexity-like search engine with SWIRL provides a powerful foundation for creating advanced search experiences. By combining traditional search capabilities with AI-powered features, organizations can create sophisticated search solutions tailored to their specific needs.

The open-source nature of SWIRL allows for extensive customization and enhancement, making it an excellent choice for organizations looking to build their own AI-powered search solutions. As the technology continues to evolve, the possibilities for extending and improving these systems will only grow.
