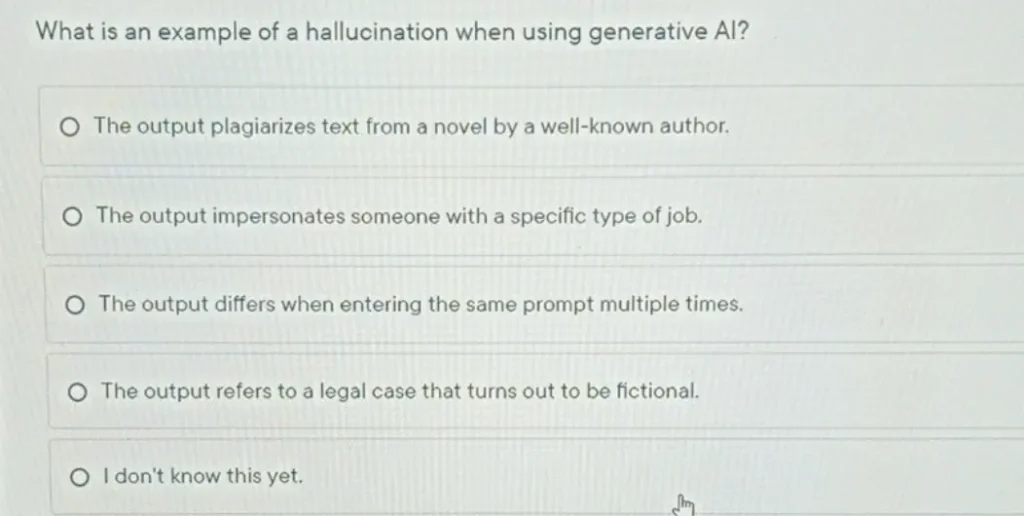

Fdaytalk Homework Help: Questions and Answers: What is an example of a hallucination when using generative AI?

a) The output plagiarizes text from a novel by a well-known author.

b) The output impersonates someone with a specific type of job.

c) The output differs when entering the same prompt multiple times.

d) The output refers to a legal case that turns out to be fictional.

e) I don’t know this yet.

Answer:

First, let’s understand what a hallucination is in the context of generative AI:

- A hallucination occurs when an AI generates information that is false, nonsensical, or not based on real data.

With this understanding, let’s analyze each of the given options to determine which one best fits the definition.

Given Options: Step-by-Step Analysis

a) The output plagiarizes text from a novel by a well-known author.

- This is not an example of a hallucination. Plagiarism copies existing text; it does not generate false information.

b) The output impersonates someone with a specific type of job.

- This is also not an example of a hallucination. Impersonation refers to the act of pretending to be someone else, which is different from fabricating false information.

c) The output differs when entering the same prompt multiple times.

- Different outputs for the same prompt are not hallucinations. This is expected behavior: generative AI chooses its output probabilistically, so repeated runs of the same prompt can produce different results.
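To make the probabilistic behavior concrete, here is a minimal sketch in Python. It is not how any particular model is implemented. The token names and probabilities are invented for illustration, and `sample_token` and `greedy_token` are hypothetical helper names.

```python
import random

# Hypothetical next-word probabilities for a single prompt (illustrative values only).
next_token_probs = {"sunny": 0.6, "cloudy": 0.3, "rainy": 0.1}

def sample_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

def greedy_token(probs):
    """Always pick the single most likely token (deterministic)."""
    return max(probs, key=probs.get)

rng = random.Random()  # unseeded, so the sampled words may differ between runs
samples = [sample_token(next_token_probs, rng) for _ in range(5)]
print("sampled:", samples)
print("greedy:", greedy_token(next_token_probs))
```

Running the script several times will usually print different sampled words, while the greedy choice stays the same. Every sampled word is still a valid option, which is why varying output on its own is not a hallucination.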

d) The output refers to a legal case that turns out to be fictional.

- This is an example of a hallucination. The AI cites a legal case that does not exist and presents it as if it were real.
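One way to picture why a fictional case counts as a hallucination is to check each cited case against a trusted source. The sketch below assumes a tiny hard-coded set stands in for a real legal database. All case names are made up, and `unverified_citations` is a hypothetical helper, not part of any library.

```python
# Hypothetical trusted index of case names. In practice this would be an
# authoritative legal database, not a hard-coded set.
KNOWN_CASES = {
    "Example Corp. v. Sample Ltd.",
    "Placeholder v. Demo County",
}

def unverified_citations(cited_cases, known_cases):
    """Return the cited cases that cannot be found in the trusted index."""
    return [case for case in cited_cases if case not in known_cases]

# Made-up model output: the first citation is in the index, the second is not.
model_citations = [
    "Example Corp. v. Sample Ltd.",
    "Imaginary v. Nonexistent Airlines",
]
flagged = unverified_citations(model_citations, KNOWN_CASES)
print(flagged)  # ['Imaginary v. Nonexistent Airlines']
```

A citation that cannot be found is only a candidate hallucination; it still needs a human check, because the trusted index itself may be incomplete.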

e) I don’t know this yet.

- This would apply only if none of the other options fit the definition. Because option d) does, it is not the answer.

Conclusion

Based on the above analysis, the correct answer is:

d) The output refers to a legal case that turns out to be fictional.

This is a hallucination because the AI has generated information about a legal case that doesn’t actually exist, presenting fabricated information as if it were real.
