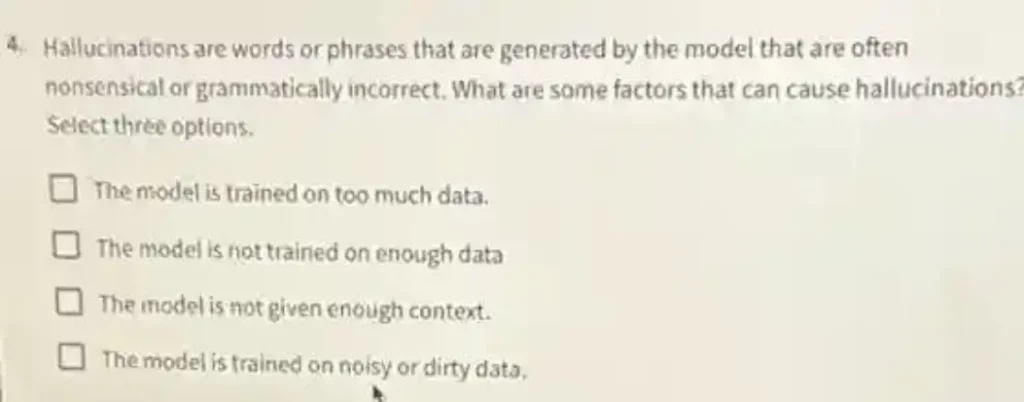

Fdaytalk Homework Help: Questions and Answers: Hallucinations are words or phrases that are generated by the model that are often nonsensical or grammatically incorrect. What are some factors that can cause hallucinations? Select three options.

a) The model is trained on too much data.

b) The model is not trained on enough data.

c) The model is not given enough context.

d) The model is trained on noisy or dirty data.

Answer:

First, let’s understand what hallucinations are in the context of language models:

- Hallucinations are instances where the model generates content that is incorrect, nonsensical, or not supported by its input or training data, often while still sounding fluent and confident. They can arise from several factors related to how the model is trained and how it is used.

With this understanding, let's work through the options.

Step-by-Step Analysis of the Options

a) The model is trained on too much data.

- Training on a large amount of data does not, in itself, typically cause hallucinations. In fact, more (good-quality) data generally helps the model learn better representations, which usually leads to better performance. Therefore, this is unlikely to be a primary cause of hallucinations.

b) The model is not trained on enough data.

- If a model is trained on insufficient data, it may not learn the necessary patterns and structures of the language, leading to poor performance and hallucinations. This can indeed be a factor.
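As a rough illustration (a toy sketch, not how modern language models are actually trained), consider a count-based next-word model with add-one (Laplace) smoothing. The vocabulary, the training sentence, and the `next_word_probs` helper are hypothetical. With a single training example, most of the probability mass goes to words the model has never seen after the given context, so its continuations are mostly unsupported guesses; with 50 examples, the observed continuation dominates.

```python
from collections import Counter


def next_word_probs(corpus, context, vocab):
    """Estimate P(next word | context) by counting, with add-one smoothing.

    corpus: list of token lists; context: tuple of preceding tokens.
    (Hypothetical helper for illustration only.)
    """
    n = len(context)
    counts = Counter()
    for sentence in corpus:
        for i in range(len(sentence) - n):
            if tuple(sentence[i:i + n]) == context:
                counts[sentence[i + n]] += 1
    total = sum(counts.values())
    # Laplace smoothing: every vocabulary word gets one pseudo-count.
    return {w: (counts[w] + 1) / (total + len(vocab)) for w in vocab}


# Hypothetical vocabulary and training sentence.
vocab = ["canberra", "sydney", "melbourne", "paris", "rome",
         "the", "capital", "of", "australia", "is"]
sentence = "the capital of australia is canberra".split()
context = ("australia", "is")

small = next_word_probs([sentence], context, vocab)       # 1 training example
large = next_word_probs([sentence] * 50, context, vocab)  # 50 training examples

print(round(small["canberra"], 2))      # 0.18 (= 2/11)
print(round(1 - small["canberra"], 2))  # 0.82 spread over never-seen words
print(round(large["canberra"], 2))      # 0.85 (= 51/60)
```

The smoothing prior stands in for "what the model falls back on when it has no evidence"; only more data pulls the estimate toward what was actually observed.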

c) The model is not given enough context.

- When generating text, a model requires sufficient context to produce coherent and relevant responses. A lack of context can lead to irrelevant or nonsensical outputs, or to the model filling the gaps with plausible-sounding guesses. This is also a likely factor.
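A similar toy sketch shows why context matters. The corpus, the prompt, and the `predict` helper are hypothetical, and real models condition on much longer contexts through learned representations rather than exact string matching. When the model sees only the last word of the prompt (`of`), two facts from its training data blur together and it is as likely to answer "italy" as "france"; with the whole prompt visible, the answer is unambiguous.

```python
from collections import Counter


def predict(corpus, prompt, context_len):
    """Maximum-likelihood next-word distribution given only the last
    `context_len` tokens of the prompt (no smoothing; hypothetical helper)."""
    context = tuple(prompt[len(prompt) - context_len:])
    counts = Counter()
    for sentence in corpus:
        for i in range(len(sentence) - context_len):
            if tuple(sentence[i:i + context_len]) == context:
                counts[sentence[i + context_len]] += 1
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


corpus = [s.split() for s in ("paris is the capital of france",
                              "rome is the capital of italy")]
prompt = "paris is the capital of".split()

print(predict(corpus, prompt, 1))  # {'france': 0.5, 'italy': 0.5}
print(predict(corpus, prompt, 5))  # {'france': 1.0}
```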

d) The model is trained on noisy or dirty data.

- Noisy or dirty data can contain errors, inconsistencies, or irrelevant information. Training on such data can lead to models learning incorrect patterns, resulting in hallucinations. This is another likely factor.
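Noisy data can be sketched the same way. The four-sentence corpus below is hypothetical, with one deliberately wrong sentence. A model that simply reproduces the statistics of its training data assigns 25% probability to the wrong answer, and sampling from it produces that error roughly a quarter of the time.

```python
import random
from collections import Counter

# Hypothetical training corpus: three correct sentences and one "dirty" one.
corpus = [
    "the capital of australia is canberra",
    "the capital of australia is canberra",
    "the capital of australia is canberra",
    "the capital of australia is sydney",  # factual error in the data
]

# Count which word follows the prompt in the training data.
prompt = "the capital of australia is"
completions = Counter(
    s[len(prompt):].strip() for s in corpus if s.startswith(prompt + " ")
)
total = sum(completions.values())
probs = {word: n / total for word, n in completions.items()}
print(probs)  # {'canberra': 0.75, 'sydney': 0.25}

# Sample answers from the learned distribution (seeded for repeatability).
rng = random.Random(0)
samples = rng.choices(list(probs), weights=list(probs.values()), k=10_000)
error_rate = samples.count("sydney") / len(samples)
print(error_rate)  # roughly 0.25
```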

Conclusion

Based on the above analysis, the three options that are most likely to cause hallucinations are:

- b) The model is not trained on enough data

- c) The model is not given enough context

- d) The model is trained on noisy or dirty data

